Generally speaking, achieving high-quality solutions in GP-based symbolic regression requires solving three interrelated subtasks:

- Selection of the appropriate subset of variables (feature selection)
- Discovery of the best-suited model structure containing these variables
- Determination of optimal parameter values of the model

Each of these subtasks depends on the results of the previous one to generate optimal solutions, so improvements in one subtask can lead to improvements of the whole algorithm. Although all three subtasks have to be solved in any case, most algorithms create solutions without explicitly addressing these steps. In some cases, this can lead to necessary building blocks becoming extinct in the population before they are combined in a solution and thus recognized by the algorithm.
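As a minimal illustration of the third subtask (determination of optimal parameter values), the hypothetical sketch below keeps one model structure fixed and compares its error under arbitrary parameters with its error after least-squares fitting. The structure `a*x + b`, the data, and the helper names `mse` and `fit_linear` are assumptions chosen for brevity: the structure is linear in its parameters, so a closed-form least-squares solution exists.

```python
# Hypothetical sketch: one fixed model structure y = a*x + b, evaluated with
# arbitrary parameters and with least-squares-fitted parameters.
# The data are made up for illustration.

def mse(params, xs, ys):
    """Mean squared error of the structure a*x + b for given (a, b)."""
    a, b = params
    return sum((a * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def fit_linear(xs, ys):
    """Closed-form least-squares estimate of (a, b) for y = a*x + b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    a = sxy / sxx
    b = mean_y - a * mean_x
    return a, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [3.0 * x - 2.0 for x in xs]   # generated by the same structure

random_params = (1.0, 1.0)         # ill-fitting parameters, same structure
fitted_params = fit_linear(xs, ys)

print(mse(random_params, xs, ys))  # prints 9.0: the structure looks poor
print(mse(fitted_params, xs, ys))  # prints 0.0: same structure, fitted parameters
```

The structure is identical in both cases; only the parameters differ. Structures that are nonlinear in their parameters have no such closed form and need an iterative method.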

However, as a biologically-inspired approach guided by fitness-based selection, the GP search process for symbolic regression is characterized by a loose coupling between fitness, expressed as an error measure with respect to the target variable, and variation operators | subordinate search heuristics in solution space that generate new models in each generation.Ĭonsequently, it is difficult to foresee the effects on model output when variation operators perform changes on the model structure, often leading to situations where promising model structures are ignored by the algorithm due to low fitness caused by ill-fitting parameters . The ability to simultaneously search the space of possible model structures and their parameters (in terms of appropriate numerical coefficients) makes genetic programming (GP) a popular approach for symbolic regression. Symbolic regression is the task of finding a mathematical model that best explains the relationship between one or more independent variables and one dependent variable. Genetic programming with nonlinear least squares performs among the best on the defined benchmark suite and the local search can be easily integrated in different genetic programming algorithms as long as only differentiable functions are used within the models.

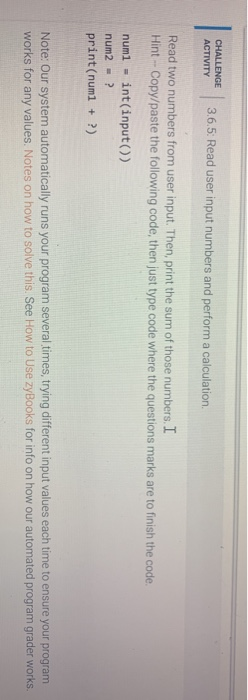

Our results are compared with recently published results obtained by several genetic programming variants and state of the art machine learning algorithms. Using an extensive suite of symbolic regression benchmark problems we demonstrate the increased performance when incorporating nonlinear least squares within genetic programming. We provide examples where the parameter identification succeeds and fails and highlight its computational overhead. We employ the Levenberg–Marquardt algorithm for parameter optimization and calculate gradients via automatic differentiation. In this paper we analyze the effects of using nonlinear least squares for parameter identification of symbolic regression models and integrate it as local search mechanism in tree-based genetic programming.
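To make the combination of Levenberg–Marquardt and automatic differentiation more concrete, here is a minimal, dependency-free sketch. It is not the implementation used in the paper. The fixed model structure `a * exp(b * x)`, the synthetic data, the starting point, the plain Levenberg damping `lam * I` (rather than Marquardt's diagonal scaling), and the schedule that divides or multiplies `lam` by 10 are all assumptions. Gradients come from forward-mode automatic differentiation with dual numbers, using one forward pass per parameter.

```python
import math


class Dual:
    """Dual number for forward-mode automatic differentiation."""

    def __init__(self, val, der=0.0):
        self.val = val  # function value
        self.der = der  # derivative w.r.t. the currently seeded parameter

    def __add__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        return Dual(self.val + other.val, self.der + other.der)

    def __mul__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        # Product rule: (uv)' = u'v + uv'
        return Dual(self.val * other.val,
                    self.der * other.val + self.val * other.der)

    __radd__ = __add__
    __rmul__ = __mul__


def dexp(d):
    """exp lifted to dual numbers: (exp u)' = exp(u) * u'."""
    e = math.exp(d.val)
    return Dual(e, e * d.der)


def model(params, x):
    """Hypothetical fixed model structure f(x; a, b) = a * exp(b * x)."""
    a, b = params
    return a * dexp(b * x)


def residuals_and_jacobian(params, xs, ys):
    """Residuals r_i = f(x_i) - y_i and Jacobian J_ij = dr_i/dp_j via AD."""
    r, jac = [], []
    for x, y in zip(xs, ys):
        row = []
        for j in range(len(params)):
            # Seed parameter j with derivative 1: one forward pass per parameter.
            seeded = [Dual(p, 1.0 if k == j else 0.0) for k, p in enumerate(params)]
            out = model(seeded, x)
            row.append(out.der)
        r.append(out.val - y)
        jac.append(row)
    return r, jac


def solve(a, b):
    """Solve the small dense system a x = b (Gaussian elimination, pivoting)."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda i: abs(m[i][col]))
        m[col], m[piv] = m[piv], m[col]
        for i in range(col + 1, n):
            f = m[i][col] / m[col][col]
            for k in range(col, n + 1):
                m[i][k] -= f * m[col][k]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (m[i][n] - sum(m[i][k] * x[k] for k in range(i + 1, n))) / m[i][i]
    return x


def levenberg_marquardt(params, xs, ys, iters=100, lam=1e-3):
    """Minimize sum(r_i^2) over the parameters of a fixed model structure."""
    params = list(params)
    n_p = len(params)
    r, jac = residuals_and_jacobian(params, xs, ys)
    cost = sum(ri * ri for ri in r)
    for _ in range(iters):
        # Damped normal equations: (J^T J + lam * I) delta = -J^T r
        jtj = [[sum(row[i] * row[j] for row in jac) for j in range(n_p)] for i in range(n_p)]
        jtr = [sum(row[i] * ri for row, ri in zip(jac, r)) for i in range(n_p)]
        for i in range(n_p):
            jtj[i][i] += lam
        delta = solve(jtj, [-g for g in jtr])
        trial = [p + d for p, d in zip(params, delta)]
        r_t, jac_t = residuals_and_jacobian(trial, xs, ys)
        cost_t = sum(ri * ri for ri in r_t)
        if cost_t < cost:   # accept: behave more like Gauss-Newton
            params, r, jac, cost = trial, r_t, jac_t, cost_t
            lam /= 10.0
        else:               # reject: behave more like gradient descent
            lam *= 10.0
    return params, cost


xs = [0.0, 0.5, 1.0, 1.5, 2.0]
ys = [2.0 * math.exp(0.5 * x) for x in xs]  # synthetic, noise-free data
fitted, final_cost = levenberg_marquardt([1.0, 0.0], xs, ys)
print(fitted, final_cost)  # expected to approach a = 2, b = 0.5
```

In a GP setting, a routine like `levenberg_marquardt` would be applied to each individual's numeric coefficients before its fitness is evaluated. This requires every function in the tree to be differentiable, which here means each primitive needs a dual-number version such as `dexp`.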
